Facial Action Coding System (FACS)

Although SCAnR considers all six channels, there is an abundance of information we can discover by just looking at the face and analyzing its muscle movements. This is best done through the Facial Action Coding System (FACS).

What is FACS?

The Facial Action Coding System (FACS) is the most widely acknowledged, comprehensive, and credible system for measuring visually discernible facial movements. It was developed by psychologists Paul Ekman and Wallace V. Friesen with the intention of objectively measuring facial expressions for behavioral science investigations (Ekman & Friesen, 1978; Ekman, Friesen, & Hager, 2002).

Before the development of FACS, no standard system for facial measurement existed, which made it difficult to collect reliable scientific data in the field.

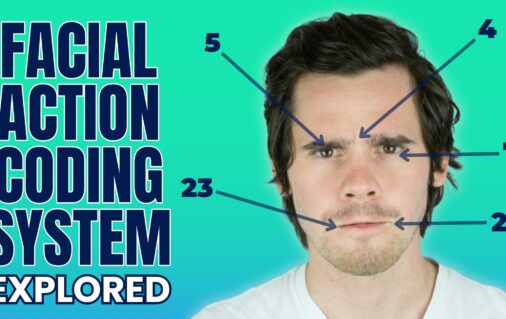

FACS is anatomically based and comprises 44 distinct Action Units (AUs), each corresponding to a distinct movement of the face, along with several sets of head and eye movements and positions. Each AU has a numeric code that identifies the muscle or muscle group being contracted or relaxed to produce the observed change in appearance, which makes it possible to analyze minute facial motions. The intensity of each AU is documented on a five-point scale. Events are single facial expressions that can be coded in their entirety: each is deconstructed into an AU-based description, which may consist of a single Action Unit or a combination of Action Units.
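To make the idea of an AU-based description concrete, here is a minimal Python sketch of how a coded event might be stored. It assumes the common FACS notation in which the five-point intensity is written as a letter from A (weakest) to E (strongest) after the AU number; the names `ActionUnit` and `parse_event` are hypothetical and are not part of any official FACS tool.

```python
import re
from dataclasses import dataclass
from typing import List, Optional

# Assumption: the five-point intensity scale is written as letters A to E.
INTENSITY_LEVELS = "ABCDE"


@dataclass(frozen=True)
class ActionUnit:
    number: int                      # numeric AU code, e.g. 6 or 12
    intensity: Optional[str] = None  # None when intensity was not scored

    @property
    def intensity_rank(self) -> Optional[int]:
        """Return 1 (weakest) to 5 (strongest), or None if unscored."""
        if self.intensity is None:
            return None
        return INTENSITY_LEVELS.index(self.intensity) + 1


# One token is an AU number with an optional intensity letter, e.g. "12" or "6C".
_TOKEN = re.compile(r"^(\d+)([A-E])?$")


def parse_event(code: str) -> List[ActionUnit]:
    """Split a code such as '6C + 12D' into its Action Units."""
    units = []
    for token in code.split("+"):
        match = _TOKEN.match(token.strip())
        if match is None:
            raise ValueError(f"unrecognised AU token: {token!r}")
        units.append(ActionUnit(int(match.group(1)), match.group(2)))
    return units
```

For example, `parse_event("6C + 12D")` yields two `ActionUnit` records, and an event made of a single AU, such as `"12"`, is handled the same way with the intensity left unscored.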

Although FACS is based on muscle movement, there is no one-to-one correspondence between muscles and AUs. Each AU is related to a specific muscle or group of muscles; however, because a single muscle may act in different ways and create an array of changes in appearance, AU codes refer to how the muscle moves to create a specific facial change.

Although the number of AUs in FACS is not large, more than 7,000 distinct AU combinations have been observed (Ekman & Scherer, 1982). FACS measures facial movement through frame-by-frame analysis of video-recorded or photographed facial events.

Through the descriptive power of FACS, each detail of an expression can be thoroughly identified and categorized by a trained FACS coder to reveal the specific AUs that produced the expression.

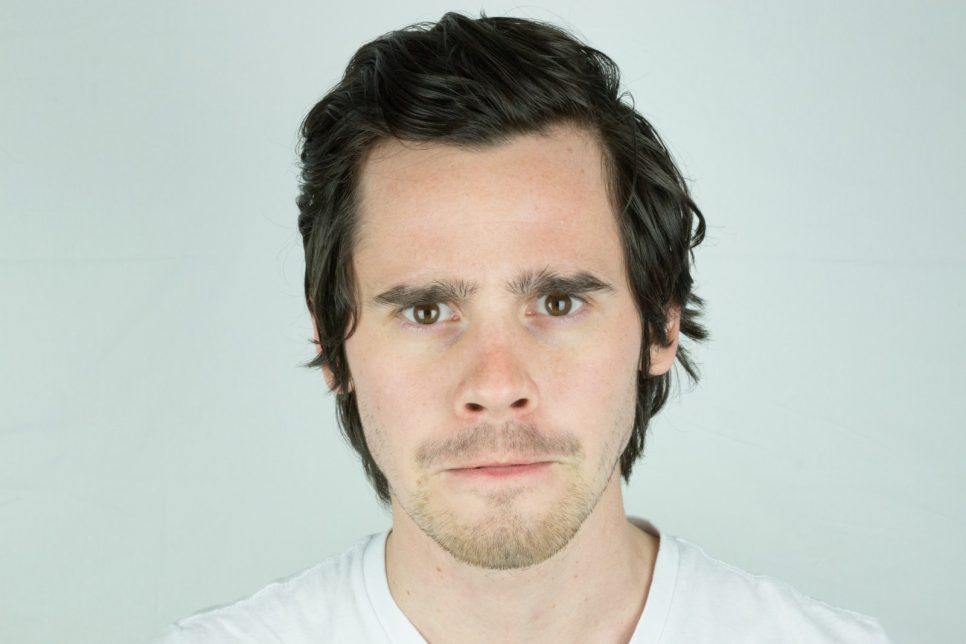

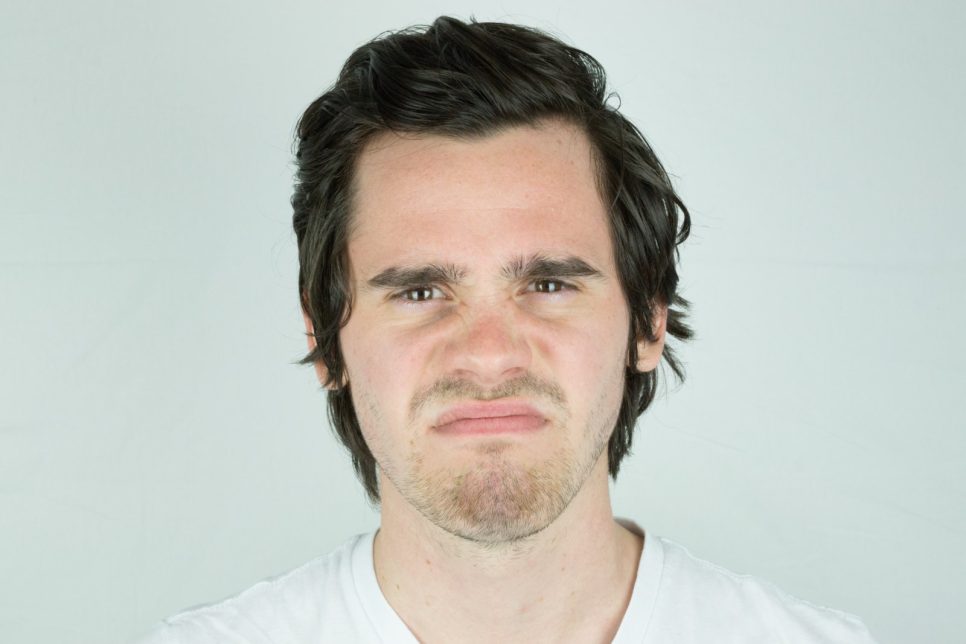

Below are the FACS codes for each universal facial expression. Each number is the code of an Action Unit (AU), which represents a muscle movement (of an individual muscle or a muscle group). The * symbol marks the most reliable muscle movements, meaning those that are hardest to produce voluntarily and therefore hardest to fake.


Happiness: 6* + 12

Anger: 4 + 5 + 7 + 23*

Disgust: 9 + 10* + 17

Surprise: 1 + 2 + 5 + 25 + 26/27

Fear: 1* + 2* + 4* + 5 + 7 + 20 + 25 + 26

Sadness: 1* + 4 + 15*

Contempt: R14*
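The list above can be treated as a small lookup table. The Python sketch below shows one way to compare an observed set of AUs against these prototypes. The scoring rule (Jaccard overlap) is an illustrative assumption, not part of FACS and not how trained coders or interpretation databases work; it is used here because the Surprise prototype with AU 26 is a subset of the Fear prototype, so a plain "contains all AUs" check would be ambiguous. The function names are hypothetical.

```python
from itertools import product

# Prototypes copied from the list above. The "*" reliability markers are
# dropped, "26/27" means either AU fills that slot, and "R14" is kept as a
# single token (the R prefix conventionally marks a right-side action).
PROTOTYPES = {
    "Happiness": "6 + 12",
    "Anger": "4 + 5 + 7 + 23",
    "Disgust": "9 + 10 + 17",
    "Surprise": "1 + 2 + 5 + 25 + 26/27",
    "Fear": "1 + 2 + 4 + 5 + 7 + 20 + 25 + 26",
    "Sadness": "1 + 4 + 15",
    "Contempt": "R14",
}


def variants(code):
    """Expand a code string into every concrete AU set it allows."""
    slots = [slot.strip().split("/") for slot in code.split("+")]
    return [frozenset(choice) for choice in product(*slots)]


def jaccard(a, b):
    """Overlap between two AU sets: 1.0 means identical, 0.0 means disjoint."""
    return len(a & b) / len(a | b)


def best_match(observed):
    """Return (emotion, score) for the prototype closest to the observed AUs."""
    observed = frozenset(observed)
    scores = {
        emotion: max(jaccard(observed, v) for v in variants(code))
        for emotion, code in PROTOTYPES.items()
    }
    emotion = max(scores, key=scores.get)
    return emotion, scores[emotion]
```

With this sketch, `best_match({"6", "12"})` returns `("Happiness", 1.0)`. Real expressions are often partial or blended, so a score like this is at most a rough first pass and not an interpretation of what the person feels.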

FACS Research

Facial expression analysis using FACS demonstrates how we can learn about someone’s emotional state, cognition, personal disposition, honesty, and psychopathology by analyzing facial expressions.

FACS can help identify patterns associated with dishonesty, with a reported accuracy of 80% in deception detection. It has been used in medical studies to distinguish between genuine and fabricated pain expressions. It has also been used to identify the facial movements associated with intoxication, which could be useful in real-world settings such as law enforcement.

FACS has proven extremely beneficial in the field of mental health. It has been used to identify facial cues that distinguish depressed people who are suicidal from those who are not. FACS has also been shown to predict the onset or remission of depression, schizophrenia, and other psychopathologies, and it has been used to determine how well someone is coping after a devastating loss.

FACS has been used to detect physiological abnormalities such as cardiovascular disorders; facial expression analysis studies have predicted transient myocardial ischemia in coronary patients.

These outstanding research accomplishments demonstrate the depth of information that can be uncovered by analyzing facial expressions and facial movements (e.g., Ekman et al., 2001; Bartlett et al., 1999; Ekman, O’Sullivan, Friesen, & Scherer, 1991; Frank & Ekman, 1997; Craig, Hyde, & Patrick, 1991; Heller & Haynal, 1994; Bonanno & Keltner, 1997; Ekman & Rosenberg, 1997; Babyak et al., 2001).

FACS Uses

Since FACS was first developed, it has primarily been used in deception detection, security, and research, but in recent years it has also been used in the arts.

FACS-based facial expression analysis is used by Pixar Studios, Apple, Microsoft, Google, Disney, and DreamWorks Animation. FACS has been integrated with computer graphics systems to display facial muscle movements in animated characters and so create effective emotional facial reactions (e.g., Toy Story; Kanfer, 1997; Rydfalk, 1987; Mase, 1991). The use of FACS as a creative tool in animation and graphics for cinema, television, virtual reality, and video games will continue to develop, as its applications are extremely beneficial in these fields.

To develop strong communication, the capacity to analyze human behavior and see others’ emotions clearly is essential. The possibilities are endless: a better knowledge of emotions can enhance childcare, education, business, professional sports, sales, management, and medicine. As a result, FACS has considerable room for growth across a variety of industries.

Comparisons

There are several systems of facial movement measurement, each with distinctive advantages and limitations.

FACS versus MAX

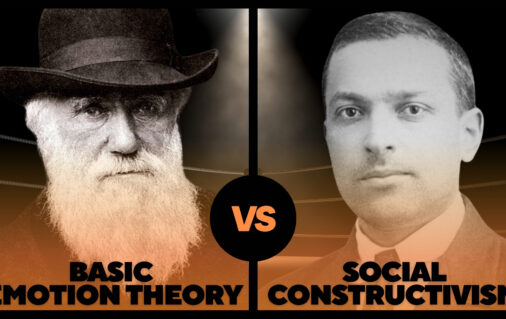

The Facial Action Coding System (FACS) and the Maximally Discriminative Facial Movement Coding System (MAX) both code observable facial expressions. The key differences are that FACS was developed on an anatomical foundation and codes facial behavior comprehensively, while MAX was developed on a theoretical basis and codes facial behavior selectively.

The primary drawback of selective, theoretically derived systems such as MAX and Ekman, Friesen, and Tomkins’s Facial Affect Scoring Technique (FAST) (1971) is that they can only analyze behaviors that have been hypothesized in advance, which limits the potential for discovery. Izard’s MAX (1979) operates on a preconceived standard of which areas of the face will change during certain emotional states; it then regards only facial activity that coincides with these standards, that is, the facial expressions that Izard has theorized to be universal.

Therefore, a selective system of measurement has a limited scope, identifying only what the face should do according to a specific theory rather than the entire capacity of the face. MAX saves time and effort by not coding every facial movement; however, it risks missing relevant data.

For instance, suppose a specific expression is not found when the appropriate stimulus is provided, such as when a surprising noise is presented and the person does not show the expected facial expression. It would then be hard to know whether the person did not feel the target emotion (surprise) or whether the system used was incapable of detecting or interpreting the person’s expression.

FACS offers a more comprehensive measurement of all muscle movements noticeable on the face, since it is free from theoretical biases. It provides a method to analyze facial movement in its entirety, giving researchers the opportunity to discover new muscular configurations that may be relevant.

FACS versus EMG

Facial Electromyography (EMG) captures muscle activity that may not be obvious to an observer by measuring electrical activity from facial muscles, thus providing data used to deduce muscular contractions. This gives EMG a distinct advantage over observation-based coding systems.

The extensive data that EMG can acquire is arguably superior to that of FACS or MAX; however, EMG is highly restricted in its application. A major problem is that electrodes must be placed on the subject’s skin, so the subject cannot be measured unobtrusively.

Since the person under investigation is aware that their face is being monitored, a secondary group of emotional responses unrelated to the research topic, such as anxiety and self-conscious behaviors, can arise. This alone may distort the readings or alter the subject’s emotional state, contaminating the data collected. Additionally, there are significant problems with cross-talk, in which contractions of surrounding muscles interfere with the signal and cause emotional responses to be misread (Kleck et al., 1976).

FACS Interpretations (FACS/EMFACS Dictionary and FACSAID)

FACS/EMFACS is an emotion dictionary computer program that detects specific facial occurrences linked to core expressions of emotion (EM = emotion). The program uses research-based and theoretically derived data to code spontaneous facial behavior. FACS measures facial movement through frame-by-frame examination of a video-recorded facial event; EMFACS, by contrast, requires the coder to view facial activity in real time.

Since EMFACS is essentially a selective, emotion-oriented application of FACS, it records only facial movements that are foundational to specific emotional expressions. In more recent years, however, the FACS/EMFACS dictionary has been replaced with FACSAID (FACS Affect Interpretation Database). This system cross-references facial events with previously recorded studies of parallel emotional events, providing a faster and more reliable analysis.

FACS and Technology

FACS is the most accurate form of facial measurement currently available; however, it is labor-intensive, and it is limited by its reliance on the skill and experience of the coder.

A hindrance to the common practice of FACS is the amount of time it requires. Over 100 hours of study and practice are necessary to achieve minimal competency as a FACS coder. Moreover, a single minute of video takes approximately an hour to score. The number of qualified FACS coders is currently limited, which in turn limits the opportunities and situations in which FACS can be applied in research.
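A quick back-of-the-envelope calculation shows how these figures add up for a study. The Python sketch below uses only the two approximate numbers given above (about 100 hours of training per new coder and about one hour of scoring per minute of video); the function name and the idea of adding training time for new coders are illustrative assumptions.

```python
# Approximate figures taken from the text above.
TRAINING_HOURS_PER_CODER = 100.0        # minimum study and practice time
SCORING_HOURS_PER_VIDEO_MINUTE = 1.0    # roughly one hour per minute of video


def coding_hours(video_minutes: float, new_coders: int = 0) -> float:
    """Estimate total person-hours to score footage, plus training if needed."""
    scoring = video_minutes * SCORING_HOURS_PER_VIDEO_MINUTE
    training = new_coders * TRAINING_HOURS_PER_CODER
    return scoring + training
```

Under these assumptions, 90 minutes of footage needs roughly 90 hours of scoring, and about 290 person-hours in total if two coders must be trained first.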

Computer science and psychology experts are presently collaborating on methods of automating facial measurement. There has been significant progress over the last ten years in designing computer software systems that attempt to identify human facial expressions. The development of an automated Facial Action Coding System would greatly enhance the use of FACS as a practical and effective measurement tool. Because an automated version of FACS would not depend on the experience of the individual coding the video, the consistency, precision, and speed of coding could be improved. Additionally, FACS may play a vital role in the advancement of artificial intelligence, aiding the development of emotion recognition in robotics.

Most of the systems developed focus on recognizing universal emotions such as enjoyment, surprise, anger, sadness, fear, and disgust. This aligns with the work of Darwin (1872) and, more recently, Ekman and Friesen (1976), Ekman (1993), and Izard and colleagues (1983), who proposed that facial expressions are linked to basic universal emotions.

Bartlett (1999), Donato (1999), Cohn (1999), and Lien (2000), along with their colleagues, have conducted various studies to test the automatic recognition of FACS upper- and lower-face Action Units (AUs). By manually aligning pictures of faces free of motion, they were able to use image sequences when coding. The computer success rate in identifying single AUs and select simple AU combinations in these images ranged from 80 to 95 percent. However, extracting accurate information from video remains an arduous task.

Using computer systems or artificial intelligence for FACS coding is complicated because such systems rely on a database of images and information, and those databases are often built from older images that have a limited range and lack diversity. If a data set primarily uses images of Caucasian subjects to represent the facial expressions of emotion, the system will have less information about what facial expressions look like across genders and ethnic groups. This could become problematic if these systems were used, for example, in airport security: with fewer images to reference, individuals not represented in the database would likely be disproportionately flagged. Individual differences (scars, plastic surgery, face coverings), factors such as lighting and camera angles, and ethical and privacy concerns also make automating FACS coding quite challenging.

The time required to apply FACS could be decreased radically if two primary components were improved. First, a computer network with competent knowledge of the observational principles of facial coding in FACS would need to be developed (Rowley, Baluja, & Kanade, 1998; Bartlett, Ekman, Hager, & Sejnowski, 1999).

Second, that system would need to advance to the point where it could reliably recognize all conceivable changes across any face. Emotion is often communicated through subtle facial expressions; therefore, for automated computer systems to detect these subtle changes effectively, a much larger database must be developed (Cohn, Kanade, & Tian, 2000).

Such procedures should become available within the next decade, leading to a significant rise in research conducted regarding spontaneous facial expression.

FACS Summary

The Facial Action Coding System (FACS) is universally recognized as a reliable measurement tool. Being able to recognize facial expressions is critical to understanding human emotion and nonverbal communication; this form of emotional expression transcends race, religion, culture, economic status, gender, and age.

Unlike other facial measurement systems, FACS measures all facial movement rather than only those movements selectively linked to emotions, which provides a more thorough evaluation. As a system of measurement, FACS is free of theoretical biases. It can be applied selectively to interpret specific facial expressions connected to emotion, or it can be used as a method of collecting data to describe intricate details of all observable facial behavior.

FACS has a comprehensive ability to identify the morphology (the specific facial actions which occur) and dynamics (duration, onset, and offset time) of facial movement. A significant advantage of using FACS for facial analysis is that it can be applied without the subject’s awareness; it is unobtrusive.
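As a rough illustration of what measuring dynamics can involve, the Python sketch below derives timing values from frame-by-frame intensity scores for a single AU. It rests on several simplifying assumptions that are not spelled out in the text: intensity is scored per frame as an integer from 0 (absent) to 5, the sequence contains one episode of the movement, the apex is every frame from the first to the last occurrence of the maximum intensity, onset time runs from first appearance to the start of the apex, and offset time runs from the end of the apex to the last active frame. The function name `au_dynamics` is hypothetical.

```python
from typing import Dict, Optional, Sequence


def au_dynamics(intensity: Sequence[int], fps: float) -> Optional[Dict[str, float]]:
    """Summarise the timing of one AU episode from per-frame intensity scores.

    Returns None when the AU never appears in the sequence.
    """
    active = [i for i, level in enumerate(intensity) if level > 0]
    if not active:
        return None
    start, end = active[0], active[-1]

    # Assumption: the apex spans the first to the last frame at peak intensity.
    peak = max(intensity)
    apex = [i for i, level in enumerate(intensity) if level == peak]

    return {
        "onset_s": (apex[0] - start) / fps,          # appearance to apex
        "apex_s": (apex[-1] - apex[0] + 1) / fps,    # time spent at the apex
        "offset_s": (end - apex[-1]) / fps,          # apex to disappearance
        "duration_s": (end - start + 1) / fps,       # whole episode
    }
```

Called on the scores `[0, 0, 1, 2, 3, 3, 2, 1, 0, 0]` at 10 frames per second, the sketch reports an episode lasting 0.6 seconds, split evenly between onset, apex, and offset.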

The future development of an automated FACS tool, capable of measuring facial qualities at a level of accuracy equal or superior to that of a trained human FACS coder, is necessary to support the anticipated progression of facial measurement in behavioral science and other fields.
